#63

Microsoft Recalled, AI = Apple Intelligence, Google accepts fumbling, SD3, GPT2 in 4hrs, Base Smart Wallets, ShadeMap, Univer, Memecoins, SLS bills, Dyson sphere, SpaceTop, Solo Dev Motivation

👋🏻 Welcome to the 63rd!

We didn't forget it; we were just stuck in some chores and a nail-biting Ind-Pak match. We apologize for the delay and appreciate your patience. Now, without further ado, let's dive into this week's updates!

📰 Read #63 on Substack for the best formatting

🎧 Podcast version of this edition is available here → #63 | Recast

What’s happening 📰

⏮️ After getting both love and privacy concerns from users and devs across the board, Microsoft finally made Recall an opt-in feature (Recall is their screenshot-of-your-every-move feature, which is supposed to ship in their new Copilot+ PCs; it’s like Rewind but native).

🍎 Apple is calling their smart switch from on-device to cloud AI 🥁 “Apple Intelligence” across all their devices, to be revealed at WWDC on 10th June (neat. not sure if intentional but dropping the artificial is a good move to keep users from being alarmed).

😳 Almighty Karpathy dropped a 4-hour video last night, “Let’s reproduce GPT-2 (124M)” (guess a lot of our weekdays are sorted now)

✨ AGI Digest

📦 Product Feature Updates:

🍪 Nvidia announced NIM on Huggingface Inference Endpoints. NVIDIA NIM is inference microservices that provide models as optimized containers, with Llama3-8B serving at upwards of 9k tokens/sec. It currently supports Llama3-8B and 70b with support for Mixtral 8x22B, Phi-3, and Gemma coming soon.

📞 Function Calling is now generally available across all Claude 3 models on the Anthropic Messages API, Amazon Bedrock, and Google Cloud's Vertex AI. Function Calling allows models to perform tasks, manipulate data, and provide more dynamic and accurate responses by calling appropriate external tools and APIs.

🍕 Google acknowledged that they fumbled with their AI Overview and are slowly rolling it back with improvements (To be fair, it was mainly sarcastic / troll-y content in user forums that led to this). So what did they change this time? Just better guardrails for detecting nonsensical search terms by users, as well as limiting user-generated content from retrieved search results.
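🧪 For the curious, here is a rough, offline sketch of the function-calling flow mentioned above for Claude 3: you describe a tool with a JSON schema, the model replies with a tool name plus arguments, and your code runs the matching function. Everything here is an assumption for illustration: `get_weather`, its stubbed data, and the hand-written `model_request` are hypothetical, and no real API is called.

```python
import json

# Tool description in the JSON-schema style used by function-calling APIs.
# `get_weather` is a hypothetical tool made up for this sketch.
TOOLS = [
    {
        "name": "get_weather",
        "description": "Return the current temperature for a city.",
        "input_schema": {
            "type": "object",
            "properties": {"city": {"type": "string"}},
            "required": ["city"],
        },
    }
]


def get_weather(city: str) -> dict:
    # Stubbed data so the example runs without any network access.
    fake_temperatures = {"Pune": 31, "London": 17}
    return {"city": city, "celsius": fake_temperatures.get(city)}


# Map tool names to the local Python functions that implement them.
REGISTRY = {"get_weather": get_weather}


def run_tool_call(tool_call: dict) -> str:
    """Run one model-requested tool call and return the result as JSON."""
    func = REGISTRY.get(tool_call["name"])
    if func is None:
        return json.dumps({"error": f"unknown tool: {tool_call['name']}"})
    return json.dumps(func(**tool_call["input"]))


# Pretend the model asked for this tool (hand-written, not real model output).
model_request = {"name": "get_weather", "input": {"city": "Pune"}}
print(run_tool_call(model_request))
```

In a real integration, the JSON string returned by `run_tool_call` would be sent back to the model so it can write the final answer.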

⚓️ Model and Dataset Drops:

2️⃣ The QwenLM team released the multilingual Qwen 2 with 5 models in the sizes of 0.5B, 1.5B, 7B, 57B-A14B (MoE), and 72B. The 7B and 72B have a context length of 128K while other models support 32K. Though on paper the 72B scores higher than Llama3-70B, all human-rated leaderboards prefer Llama3-70B over it (Great reminder to not trust benchmarks blindly¹).

🏋️ StabilityAI will release the SD3 Medium Weights on June 12. Get access using this waitlist form. Meanwhile, they also open-sourced Stable Audio Open (also available for inference on fal.ai). It can generate up to 47 seconds of samples and sound effects such as drum beats, instrument riffs, ambient sounds, foley, and production elements.

🍷 Huggingface released FineWeb, a dataset for LLM pretraining (15 trillion tokens, 44TB of disk space). It is derived from 96 CommonCrawl snapshots and in their tests produces better-performing LLMs than other open pretraining datasets.

📮 New Leaderboards and Benchmarks:

🏆 Allen Institute for AI released the WildBench V2 benchmark with better scoring metrics, length bias mitigation, harder prompts, and better comparisons across all models. Findings?

GPT-4 (o & t) is the undisputed king, followed by Claude Opus or Gemini 1.5 Pro.

Llama-3-70B still has the best open-source performance, but DeepSeekV2 and Qwen2-72B occasionally come close. Interestingly, it also shows that Qwen2-72B is not much of an improvement over Qwen1.5-72B.

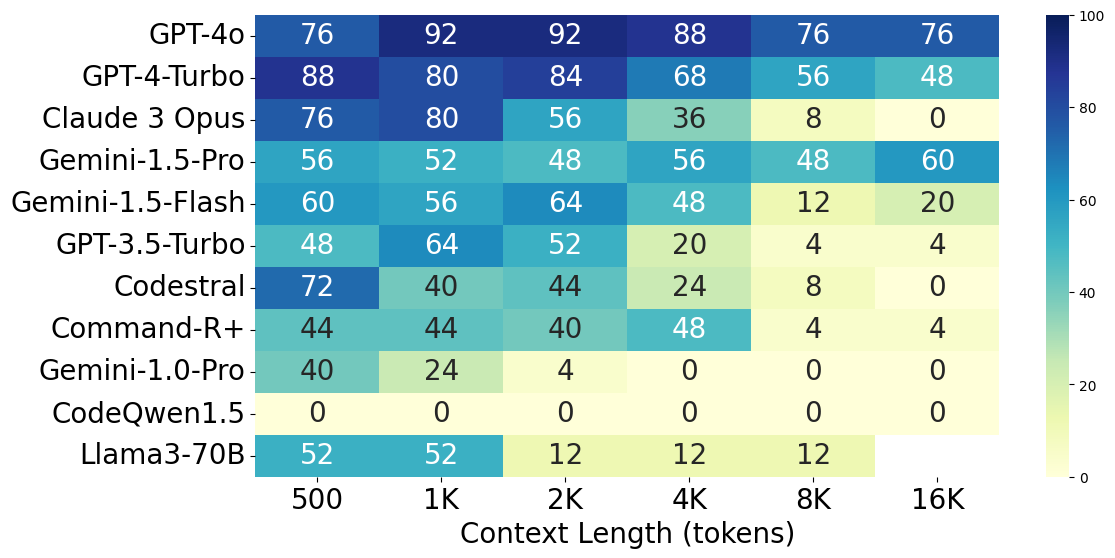

🐞 Bug In The Code Stack is a new benchmark for measuring LLMs' ability to detect bugs in large codebases. The results are interesting for this one because the context length changes the game completely for some really good models. Look for yourself below!

🎁 Miscellaneous

🎛️ Mistral is on a roll for the last few weeks! This time they introduced a new Finetune Endpoint on their platform as well as a mistral-finetune OSS library for fine-tuning their open-weight models yourself. And to make sure people use this, they’re organizing a Fine-tuning Hackathon till the 30th of this month, with €2500 in API credits being awarded to the top 3 winners each.

📹 Chinese tech giant and TikTok competitor Kuaishou Technology recently demonstrated Kling, a text-to-video tool that gives really tough competition to OpenAI’s Sora. While it’s not open-sourced, you might get access to it by downloading their app. It can also use a picture and a motion-capture clip to generate videos with specific movements.

🔐 0x Digest

🔐 Fhenix raised $15M in Series A funding and released their initial testnet: Helium.

They have added special precompiles to the EVM that allow computations on encrypted data without the need for decryption, allowing users to write smart contracts with familiar syntax while leveraging the capabilities of FHE.

💳 Coinbase launched self-custodial smart wallets. Instead of seed phrases, you can create your smart wallets using Passkeys. This can be a big game changer and a fast way to get onboarded to web3, and Coinbase is very well versed in the distribution game. Base is already killing it in the list of L2s, making $6.98M in profits in May. They also now allow you to text 100+ tokens.

😞 Vitalik expresses his disappointment with the aimless celebrity meme-coins “experimentation” cycle. (aimless as in Financialization *as the final product*). He went ahead and posted a summary of features that a celebrity crypto project needs to have for him to be more willing to respect it.

🛍️ After Mir and Hermez, Polygon acquires Toposware making their total investments in zk ~$1B. zk is essential for Polygon’s current narrative of aggregated blockchains and they know that they need all that is out there.

💥 Brian Guan, co-founder of the streaming app Unlonely, shared on Twitter how they accidentally pushed the private key to GitHub getting $40k drained from their wallet. (be careful out there, sometimes you can do dumber things than you think you can do)

🛠️ Dev & Design Digest

✍🏻 One of the hardest things in Computer Science is naming things. Here are some Best Practices For Naming Design Tokens, Components And Variables.

🔎 Turso brings basic Native Vector Search to SQLite. They have a fork of SQLite named libsql, where they added data types like vector and embedding, plus some utility functions to work with them.

🎊 TypeScript 5.5.0 beta fixes the type inference of arrays after .filter.

Source: Egoist on Twitter

🧾 Another case of managed deployment services giving unusual (but expected, no?) amounts of bills. Jingna Zhang built Cara, a portfolio & social platform for artists, and the app quickly gained users and made it to the top 5 in the US App Store, reaching 500k users. But they were still running on Vercel functions. Last week they also shared that they “had to upgrade our servers 7 times and will have to pay $13,500/month just to cover our database bills”. On Jun 6th, they got a bill of $96.28k, which is a good reminder to move your services to a cloud provider once you get traffic and avoid shooting yourself in the foot.
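🧮 Circling back to the vector search item above: if you are wondering what a "vector search" actually computes, here is a minimal pure-Python sketch. It is not libsql's API or implementation; the `ROWS` data, `cosine_similarity`, and `top_k` are all made up for illustration, and a real database would use an index rather than scanning every row.

```python
import math


def cosine_similarity(a, b):
    """Cosine of the angle between two equal-length vectors."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return dot / (norm_a * norm_b)


def top_k(query, rows, k=2):
    """Return the names of the k rows whose vectors are most similar to query."""
    scored = [(cosine_similarity(query, vec), name) for name, vec in rows]
    scored.sort(reverse=True)  # highest similarity first
    return [name for _, name in scored[:k]]


# Toy "table" of (name, embedding) rows; the numbers are invented.
ROWS = [
    ("cat", [0.9, 0.1, 0.0]),
    ("dog", [0.8, 0.2, 0.1]),
    ("car", [0.0, 0.1, 0.9]),
]

print(top_k([1.0, 0.0, 0.0], ROWS))
```

The query vector points in nearly the same direction as "cat" and "dog", so those two come back first while "car" is left out.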

What brings us to awe 😳

🌤️ ShadeMap.app maps every mountain, building, and tree shadow in the world simulated for any date and time.

💫 Astronomers reported a potential Dyson Sphere 1,000 light years away from Earth. [a quick primer on Dyson Sphere]

🕶️ SpaceTop by Sightful is a truly “screenless” laptop. It is priced at $1900 and runs on top of a custom spatial OS called spaceOS. You can reserve it for $100 from their site.

Today I (we) Learnt 📑

🛰️ A little late to the party maybe, but we learned that “Voyager 1 was launched after Voyager 2”. Just 16 days after its twin. Voyager 1 was put on a faster and shorter trajectory, so it reached its planetary targets first despite launching later.

🪪 Sybil attack is named after the subject of the book Sybil, a case study of a woman diagnosed with dissociative identity disorder.

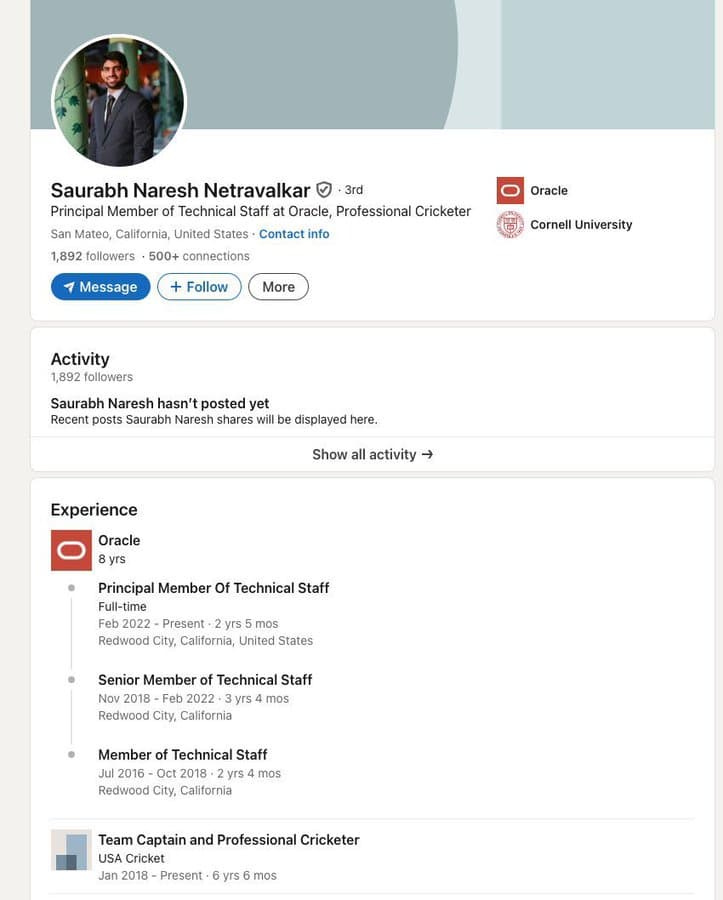

🏏 A member of the USA Cricket World Cup team that recently beat Pakistan has a full-time job as a Principal Engineer at Oracle. Let that sink in.

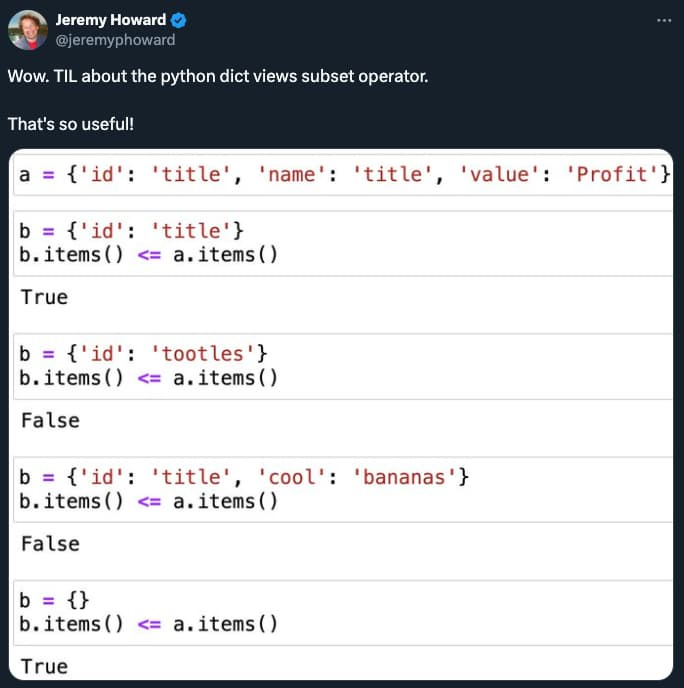

Source: Tweet by gaut

🔎 Python dictionaries can use the Python subset operator (through their keys() and items() views)

Source: Tweet by Jeremy Howard
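🐍 A quick sketch of the dictionary subset trick above, in case you want to try it. The variable names are ours, and it assumes Python 3, where `keys()` and `items()` return set-like views. Comparing two plain dicts with `<=` does not work, so the check goes through the views.

```python
small = {"a": 1, "b": 2}
big = {"a": 1, "b": 2, "c": 3}
changed = {"a": 1, "b": 99}

# Every key of `small` also exists in `big`.
keys_subset = small.keys() <= big.keys()

# Every (key, value) pair of `small` also exists in `big`.
items_subset = small.items() <= big.items()

# Same keys as `small`, but "b" has a different value, so the pairs differ.
changed_items_subset = changed.items() <= big.items()

# Plain dicts do not define ordering comparisons, so this raises TypeError.
try:
    small <= big
    dicts_comparable = True
except TypeError:
    dicts_comparable = False

print(keys_subset, items_subset, changed_items_subset, dicts_comparable)
```

Handy for assertions like "this response contains at least these fields with these values".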

🤝 You have read ~50% of Nibble, the following section brings tools out from the wild.

What we have been trying 🔖

📧 Email.ml: Minimalist temporary email, valid for 1 hour.

🍱 omakub: An Omakase Developer Setup for Ubuntu 24.04 by DHH (should bookmark this if you are planning to fresh install Ubuntu)

🎞️ dupephotos: relevant royalty-free imagery (rare eh!?)

💪🏻 PostHog’s guide to hiring and managing “cracked engineers” (TL;DR → let them cook)

Builders’ Nest 🛠️

🔎 Tantivy is a full-text search engine library inspired by Apache Lucene and written in Rust.

📊 Univer: an open-source alternative to Google Sheets, Slides, and Docs.

⤵️ fetch-in-chunks: A utility for fetching large files in chunks with support for parallel downloads and progress tracking.

🏓 paddler: Stateful load balancer custom-tailored for llama.cpp

Meme of the week 😌

Off-topic reads/watches 🧗

👨‍💻 Managing My Motivation, as a Solo Dev by Marcus is an excellent read about maintaining motivation as a solo developer.

👀 Twitter founder Jack Dorsey warns social media algorithms are draining people of their free will and Elon Musk agrees with him. The only answer, in Dorsey’s view, is to create a marketplace of algorithms that return choice to users about which black boxes they find the most trustworthy - be it the plain ol’ “latest” feed or some complex algorithm custom-designed by them.

Wisdom Bits 👀

“A thousand moments that I had just taken for granted—mostly because I had assumed that there would be a thousand more.”

— Morgan Matson

Wallpaper of the week 🌁

🌌 Grab the week’s wallpaper at wow.nibbles.dev

Weekly Standup 🫠

Nibbler P is enjoying his new batch of light-roasted coffee after hitting a new record in his running distance this week.

Nibbler A was working hard and adulting Max at home this week. He added tons of books and shows to his inert to-do list.

If you liked what you just read, recommend us to a friend who’d love this too 👇🏻

¹ There is also an internal LLM pricing war going on in China between AI companies on who can make their models better and serve them cheaper.